The curious thing is not the news itself that a group of researchers hacked Google Home, Siri and Alexa by shining laser light at them, remarkable as that is. It is that nobody yet knows for sure why these assistants respond to light as if it were sound.

All three assistants are vulnerable to an attack in which lasers "inject" inaudible and sometimes invisible light commands into the devices, which can then be used to unlock doors, visit websites or even locate vehicles.

Google Assistant, Siri and Alexa hacked

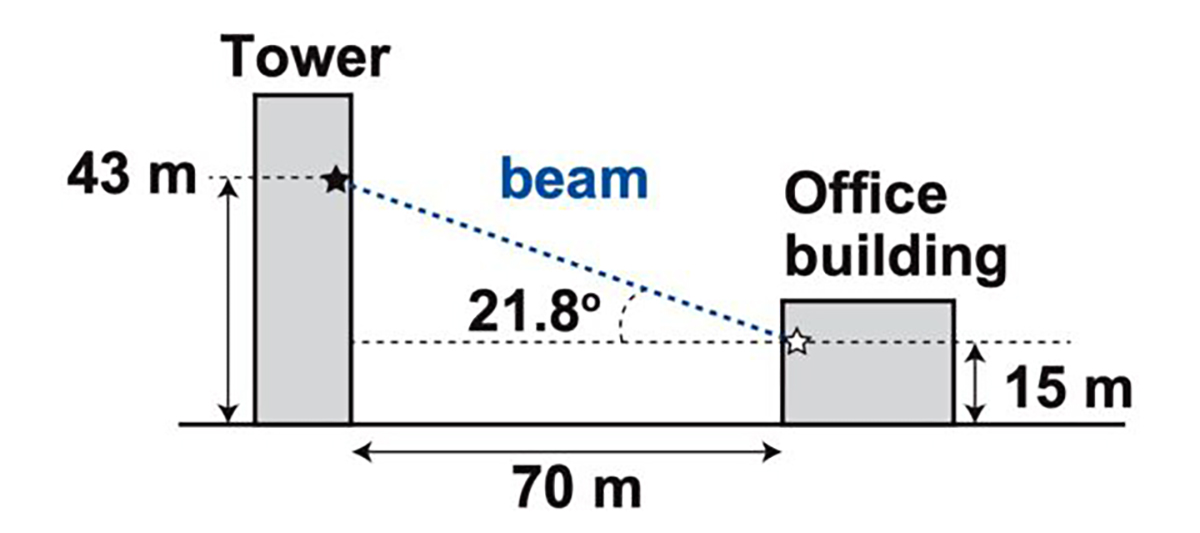

The attack works from as far as 110 meters away: low-power laser light is aimed at these voice systems to "inject" commands, which then carry out a wide variety of actions. The researchers even sent light commands from one building to another, through a window, to reach a device running Google Assistant or Siri.

As far as is known, the attack exploits a weakness in microphones built with MEMS (micro-electro-mechanical systems) technology: their microscopic components unintentionally respond to light as if it were sound.
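The article does not say how a spoken command is turned into light. A common way to do it, and the one assumed here, is amplitude modulation: the audio waveform varies the laser's drive current around a fixed bias, so the light's brightness follows the sound and the MEMS microphone picks it up as audio. The minimal Python sketch below shows only that mapping. The function names and the current values (`bias_ma`, `depth_ma`, `max_ma`) are hypothetical illustrations, not figures or code from the research, and a sine tone stands in for a recorded voice command.

```python
import math


def tone(freq_hz: float, duration_s: float, sample_rate: int = 16_000) -> list[float]:
    """Hypothetical stand-in for a recorded voice command: a sine wave in [-1, 1]."""
    n = int(duration_s * sample_rate)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


def modulate_laser_current(
    audio: list[float],
    bias_ma: float = 200.0,   # hypothetical DC bias current (mA)
    depth_ma: float = 150.0,  # hypothetical modulation depth (mA)
    max_ma: float = 350.0,    # hypothetical safe upper limit for the diode (mA)
) -> list[float]:
    """Amplitude-modulate normalized audio onto a laser diode drive current.

    Assumption: light intensity roughly tracks drive current, so the
    brightness of the beam follows the audio waveform.
    """
    peak = max((abs(x) for x in audio), default=0.0)
    if peak == 0:
        # Silence (or no samples): hold the bias current steady.
        return [bias_ma] * len(audio)
    currents = []
    for x in audio:
        # The DC bias keeps the current positive, so the negative half
        # of the waveform is not cut off.
        i = bias_ma + depth_ma * (x / peak)
        # Clamp to the allowed range to protect the (hypothetical) diode.
        currents.append(min(max(i, 0.0), max_ma))
    return currents


if __name__ == "__main__":
    drive = modulate_laser_current(tone(1_000, 0.01))
    print(len(drive), round(min(drive)), round(max(drive)))
```

With these assumed values, the drive current swings between about 50 mA and 350 mA around the 200 mA bias. In a real setup, the laser driver and sound amplifier mentioned later in the article would handle this step in hardware.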

The researchers tested the attack on Google Assistant, Siri and Alexa, as well as on Facebook Portal and a small number of tablets and phones, and they believe that any device using MEMS microphones may be vulnerable to these so-called «Light Commands».

A new form of attack

The attack has some limitations. First, the attacker needs a direct line of sight to the target device. Second, the light must be focused precisely on a specific part of the microphone. Also, unless the attacker uses an infrared laser, anyone near the device could easily see the light.

These findings matter for several reasons. They show not only that voice-controlled devices, which in many homes manage other important devices, can be attacked, but also that such attacks can be carried out under near-real-world conditions.

What draws the most attention is that the physical reason why light commands work, the very basis of the exploit, is not fully understood. Knowing exactly why it happens would give attackers more control and the potential to do more damage.

It is also striking that these voice-controlled devices do not require any kind of password or PIN. In other words, anyone able to "hack" them with this kind of light can control an entire home in which Google Assistant or Siri manages the lights, the thermostat, the door locks and more. Almost like a movie.

Low cost attack

You might imagine that a laser projector is expensive, or at least that mounting an attack like this would cost a lot. Not at all. One of the attack setups costs $390 for a laser pointer, a laser driver and a sound amplifier. For the more ambitious, adding a $199 telephoto lens makes it possible to aim from greater distances.

You may wonder who is behind this surprising finding, which reveals a new way of attacking devices we are all bringing into our homes. The researchers are Takeshi Sugawara of the University of Electro-Communications in Japan, and Sara Rampazzi, Benjamin Cyr, Daniel Genkin and Kevin Fu of the University of Michigan. So if at some point you thought this was fantasy, it is not: it is reality.

This is a new attack on voice-controlled devices running Google Assistant, Siri and Alexa, carried out with light commands, and it will have repercussions for years to come. Google Assistant even integrates with WhatsApp, so imagine what could be done with attacks like these.